I wanted to expand on the practical and mathematical implementations of the cancellation rate I referred to in last week’s post.

Why cancellation rate is so important

As a preamble to the metrics, it’s useful to know what you’re measuring and why it’s vital.

[Cancellation rate] = [product utility] + [service quality] + [acceptable price]

I put in these particular elements because I did a study of the reasons people cancel at WP Engine, and these are the main reasons for cancellation. We log every cancellation — spending time running after folks to wring out the cause — so we can deduce exactly what we can do to prevent it in future. (Of course you should do this too and get your own data.)

These three factors are, of course, critical to a healthy, growing startup, and yet individually they’re impossible to measure as precisely and easily as cancellation rate. (I’ve never seen a graph of “usefulness of the product.”) So although it’s a single number combining several factors, and we know that averaging can obfuscate, I think cancellation rate is a good overall measure of how well the company is servicing its customers, and with tools like our detailed log you can still break apart the single number into concrete, actionable influences.

Beyond the analytical breakdown, I have an emotional attachment to this number, because whenever someone cancels I think about what had to happen to get them to this point, and it kills me. Of course people cancel only after they’re already a customer, which means they’ve already gotten through the barriers preventing them from buying: finding your website, not bouncing off the home page, understanding what you offer, deciding it’s something they want, researching the competition, signing up, configuring settings, entering a credit card number, rolling through tech support, and maybe even announcing to some Facebook “friends” that they just found something cool.

Barely anyone on Earth will ever power through this gauntlet. I turn myself inside out just to get a thousand people to bounce off the home page, praying that one makes it through to the end, like a frog laying ten thousand eggs hoping three survive long enough to do the same.

And then, after all that… they cancel! Son of a bitch! I have to know why and I have to do something about it!

So we’re going to measure this bastard, and we’re going to compute another useful thing from it, but it turns out to be harder than it first appears.

The many kinds of cancellation rate

A “rate” is a ratio of “something divided by time,” and it’s unclear what the something is and over how much time.

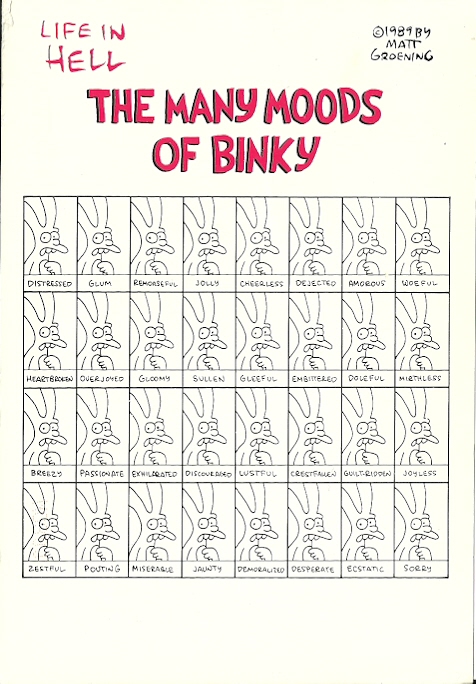

Unfortunately, like the many moods of Binky, there are many kinds of so-called “cancellation rate,” each subtly but critically different.

All of the following definitions are meaningful, but each measures something different. It’s useful to describe each so you can decide which (or several!) make sense in your case:

- Percentage of current customers who canceled in a given day/week/month. If this spikes, something just changed in how you’re behaving across the board. We had a spike in this metric in February at WP Engine when our Internet provider themselves had a datacenter-wide catastrophe which brought us down for twelve hours; of course not all spikes will have such obvious causes. We’ve also rolled out new initiatives designed to reduce this rate, and for the most part we’ve been successful. When selecting the duration component, it has to be long enough to get a stable number, but not so long that sharp changes in reality take weeks to register on the chart; I recommend using the smallest possible time period without seeing the metric hit “0%.”

- Percentage of new customers in a given month who end up canceling at any later date. This is often called “cohort analysis,” where the new customers in January are tracked together as one cohort, new customers from February as another, etc. The idea is to compare the behavior of each cohort against subsequent cohorts, but over similar periods in those customers’ lifespans. For example, compare how many who started in Jan cancelled in Feb with how many who started in Feb cancelled in Mar, and so on. This determines not whether your service is improving across the board, but whether new customers are getting a better new experience. This is especially useful if, like most companies, you have a higher cancellation rate with new customers than with old ones, and you (think you) have taken steps to improve that situation and want to measure that progress.

- Absolute number of cancellations per week. This metric is supposed to increase over time at a growing SaaS company simply because there are more and more customers available to cancel. Still, you should also be getting better at preventing cancellations, or at least certain types of cancellations, such as those caused by crappy tech support or a missing feature. Our cancellation log implicitly represents this metric because we review it weekly to look for trends. It’s not something we feel is also useful to graph because changes in the number aren’t necessarily actionable.

- Percentage of all customers who’ve cancelled over the lifetime of the company to date. This is another way of measuring an “in/out” ratio: plotting the relative number of new customers arriving versus customers exiting. For a company laser-focused on accelerating the number of active users, it might actually be worth having high cancellation rates if that meant an even higher acquisition rate. This metric measures that relative change, so as long as it’s decreasing (or not increasing), you might be happy even if you’re not watching or optimizing for the cancellation rate in isolation. For a quality-service company like WP Engine, a high cancellation rate is a sign of terminal cancer even if acquisition rates are also increasing, so this metric isn’t useful to us. It’s also not particularly useful when, again like WP Engine, the monthly cancellation rate is nice and low while new customer acquisition is healthy, because in that case this metric, by definition, diminishes asymptotically. Whenever you know for certain what a metric will do, it’s not useful or actionable to measure it!

- Cancellations as deactivations. For paid services like WP Engine, a “cancellation” is literally “the customer called to cancel, or clicked the ‘stop charging my card’ button.” For many consumer-Internet companies, most of your users aren’t paying, and therefore almost none will bother to take a cancel “action” even if they’re effectively cancelled. Rather, a lack of activity signifies an effective cancellation. In this case, you need to have a clear definition of an “active user” (e.g. “has logged in at least once in the past week”) and consider the user “cancelled” if they were active before but now are not.

- Revisionist history. What happens when a customer cancels but then returns? This is relatively rare in a company like WP Engine but is common for those consumer-Internet companies where cancellation is identical to passive deactivation, because a user might be reinvigorated by a newsletter or a tweet. In that case, you can decide that user didn’t cancel after all and update historical data in your charts. That’s perfectly fine, just as it’s perfectly fine that a customer today might become a cancellation tomorrow but that’s not yet in the chart either.
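To make the first two definitions concrete, here is a minimal sketch. It assumes a hypothetical record format in which each customer has an integer sign-up month and an optional cancellation month (months numbered 0, 1, 2 and so on), and it counts anyone who had signed up by a given month and hadn’t cancelled before it as a “current customer” for that month. Real billing data will need its own, more careful definition; the function names and sample data are illustrative only.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Customer:
    start_month: int             # month index of sign-up (hypothetical: 0 = January)
    cancel_month: Optional[int]  # month index of cancellation, or None if still active


def monthly_cancellation_rate(customers: List[Customer], month: int) -> float:
    """Fraction of the customers on the books during `month` who cancelled in it."""
    current = [
        c for c in customers
        if c.start_month <= month
        and (c.cancel_month is None or c.cancel_month >= month)
    ]
    if not current:
        return 0.0
    cancelled = sum(1 for c in current if c.cancel_month == month)
    return cancelled / len(current)


def cohort_cancellation_rate(customers: List[Customer], cohort_month: int,
                             months_after: int) -> float:
    """Fraction of the cohort that signed up in `cohort_month` and cancelled
    exactly `months_after` months later (1 = the following month)."""
    cohort = [c for c in customers if c.start_month == cohort_month]
    if not cohort:
        return 0.0
    target = cohort_month + months_after
    cancelled = sum(1 for c in cohort if c.cancel_month == target)
    return cancelled / len(cohort)


# Made-up example data: a January cohort (month 0) and a February cohort (month 1).
customers = [
    Customer(0, 1), Customer(0, None), Customer(0, None), Customer(0, 3),
    Customer(1, None), Customer(1, None), Customer(1, None), Customer(1, 5),
]

# "Started in Jan, cancelled in Feb" versus "started in Feb, cancelled in Mar".
jan_cohort = cohort_cancellation_rate(customers, cohort_month=0, months_after=1)
feb_cohort = cohort_cancellation_rate(customers, cohort_month=1, months_after=1)
print(jan_cohort, feb_cohort)                    # 0.25 0.0
print(monthly_cancellation_rate(customers, 1))   # 0.125
```

Plotting `monthly_cancellation_rate` month after month gives the first definition; lining up each cohort’s `cohort_cancellation_rate` at the same `months_after` gives the cohort comparison.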

Of all these techniques, the one that is perhaps most important and useful for us at WP Engine — and likely you too — is cancellation rate by age. I call this a “continuous version of cohort analysis.”

You take the number of cancellations in a given time period (we use months), broken out by age (e.g. younger than 30 days old versus older), and then compute the “cancellation rate” as a percentage of all customers in that age group who cancelled. Like many companies, we’ve found that people who cancel soon after sign-up do so for very different reasons than those who cancel after a year, and we care about those two groups differently, and we act on them differently.

For example, quick cancels are often due to things like “I decided I didn’t want a blog after all” or even “My son used my credit card to set up my website but I’m not going to pay for hosting.” We probably can’t affect these circumstances much, or at least it’s not worth our time to try. So we’ll never get the short-term cancellation rate lower than a certain number, a number we can actually compute by marking these in the cancellation log and totting them up separately.

This is useful, not only because it sets a sensible target “floor” for our activities that do reduce short-term cancellation, but because it calibrates our expectations on how many front-end sign-ups we need to achieve our growth targets. It’s an “automatic drag” that we can just factor into our projections.

On the other end of the time spectrum, every time we lose a long-term customer — which is thankfully almost never, knock wood — it’s cause for us to sit up and do a post-mortem. That rate had better be very close to 0%. If it ever pops it would be a “tools-down, everyone get on that right now” sort of problem.

I do worry about this happening as we grow, as should any company. It’s common knowledge that expanding companies have a hard time maintaining the level of service which earned them that growth. You sign up customers faster than your ability to hire quality people, so either existing people are stretched thin or you make the more fatal error of lowering standards to fill chairs. It’s harder to train people and keep your culture going, and those founders who were brilliant at seeking market-fit and constructing the foundation of a startup might be ill-suited for scaling that organization. It appears some of our WP Engine competitors are experiencing exactly this, right now. An increase in our long-term cancellation rate will be our first warning sign that we’re not handling the growth properly, so we watch it like a hawk.
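The “cancellation rate by age” metric described above can be sketched the same way. This is an illustration, not WP Engine’s actual code: it assumes monthly granularity, a hypothetical `(start_month, cancel_month)` pair per customer, and a configurable short-term cut-off. Three months is used here to match the LTV example below; the 30-day split mentioned earlier would be roughly `short_term_months=1`.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple


def cancellation_rate_by_age(
    customers: Iterable[Tuple[int, Optional[int]]],
    month: int,
    short_term_months: int = 3,
) -> Dict[str, float]:
    """Cancellation rate during `month`, split into short-term and long-term customers.

    Each customer is a (start_month, cancel_month_or_None) pair. Age is counted in
    whole months, so age 0 means the customer signed up during `month`.
    """
    on_books: Dict[str, int] = defaultdict(int)
    cancelled: Dict[str, int] = defaultdict(int)
    for start, cancel in customers:
        if start > month or (cancel is not None and cancel < month):
            continue  # not a customer at any point during this month
        age = month - start
        group = "short-term" if age < short_term_months else "long-term"
        on_books[group] += 1
        if cancel == month:
            cancelled[group] += 1
    return {group: cancelled[group] / on_books[group] for group in on_books}


# Made-up data for month 6: four older customers and four young ones.
customers = [(0, None), (1, None), (2, 6), (3, None),
             (5, 6), (5, None), (6, 6), (6, None)]
print(cancellation_rate_by_age(customers, month=6))
# {'long-term': 0.25, 'short-term': 0.5}
```

Tracking the `long-term` number month over month is the early-warning signal described above; the `short-term` number is the one with a natural floor.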

LTV, my way

The other useful thing we do with cancellation rate is to compute LTV (customer LifeTime Value), but I don’t use the simplistic technique espoused by many others.

“LTV” means “the total revenue you’ll get from a customer over its lifetime.” For a simple subscription business model the formula is easy to write but hard to compute:

[LTV] = [monthly revenue] × [number of months in lifetime]

The hard part is “number of months,” because of course you don’t know how many months you’ll keep a customer until that customer leaves, and hopefully most haven’t left yet. Worse, if you’re like WP Engine you haven’t been around long enough to know how long most customers will stick around. For example, WP Engine is 15 months old and we’ve retained 95% of our customers, but the longest anyone has stayed is by definition 15 months, and because we’re growing, the average is probably around 6! But how long will all these folks stick around if we keep at it for another five or ten years?

P.S. Who cares about LTV? It’s the main way to determine whether your company is profitable and how much money you can (should?) spend on marketing, but that subject is covered well elsewhere so I’ll skip this and get back to geeking out over math.

It turns out you can compute the elusive “expected number of months” from your cancellation rate, even if you only have a few months of data to go on.

The typical formula is derived like so:

- Let p be the percentage of current customers who cancel in a given month. For example, if in March you had 200 paying customers and 10 cancelled, p = 0.05.

- In any month of N customers, Np will cancel, leaving N(1-p).

- Assuming you didn’t add any new customers, you would bleed customers according to that formula, every month. The customers remaining would be N(1-p) after the first month, then N(1-p)(1-p) after the second, then N(1-p)(1-p)(1-p) after the third, and so forth.

- In each month we get $R revenue per customer, so that means the first month we get $RN, then $RN(1-p), then $RN(1-p)(1-p), and so forth.

- To compute the “lifetime” amount of revenue these customers provide, you add up this infinite series, so: $RN + $RN(1-p) + $RN(1-p)(1-p) + $RN(1-p)(1-p)(1-p) + …

- Factor out the $RN, so you get: $RN × [1 + (1-p) + (1-p)(1-p) + … ]

- That bracketed infinite series is geometric with ratio (1-p), so it sums to 1/[1-(1-p)], which is just 1/p.

- So total expected revenue is $RN/p.

- And the average expected revenue per customer is $R/p.

- And the average number of months per customer is 1/p.

So with our example of a 5% monthly cancellation rate, p = 0.05 so expected months is 20. If it’s a base-level WP Engine customer, that’s 20 months at $50 per month, so $1000 total LTV.
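As a quick numerical check of the derivation above, the sketch below (function names are illustrative) computes R/p directly and also adds up the bracketed series term by term, so you can watch the partial sums approach 1/p.

```python
def simple_expected_months(p: float) -> float:
    """Expected lifetime in months when every customer cancels with probability p each month."""
    return 1 / p


def simple_ltv(monthly_revenue: float, p: float) -> float:
    """Simplistic LTV: monthly revenue times expected lifetime, i.e. R / p."""
    return monthly_revenue * simple_expected_months(p)


def partial_sum(p: float, months: int) -> float:
    """Add up 1 + (1-p) + (1-p)^2 + ... for a finite number of months."""
    return sum((1 - p) ** k for k in range(months))


p = 0.05
print(simple_ltv(50, p))  # 1000.0
for months in (12, 60, 240):
    # The partial sums creep up toward 1/p = 20 as the horizon grows.
    print(months, round(partial_sum(p, months), 2))
```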

It’s nice when the math turns out simple, but that doesn’t mean it’s right.

It is right if your cancellation rate is indeed p every month, for every customer. But I just got through explaining that this isn’t at all the case. Newer customers tend to have high cancellation rates; older ones much smaller. In fact it’s not unusual for there to be a 10x difference between the short-term and long-term rates.

Therefore, I suggest a hybrid approach: First compute expected survivors over the short-term cancellation period, then use the “infinite sum” technique for the long-term customers.

Running an example will make this clear. Using the “cancellation rate by age” metric, suppose for the first 3 months of life your customers’ cancellation rate is 15%/mo, but after that the rate is 3%/mo.

So you retain 85% of your customers after the first month, 85% of those after the second, etc., for three months, for a retention after three months of (0.85)^3 = 0.61.

I then completely ignore the revenue received by those 39% of customers who stuck around for only a few months. Sure they gave us a little money, but surely it’s negated by the time-cost of messing with them over tech support and processing cancellations and refunds. I want LTV to be a conservative metric, so I ignore this revenue.

Now, with 61% of my original customers remaining, it makes sense to use the formula above to predict they’ll stick around for another 1/0.03 = 33 months, and since they’ve already made it through 3 months, that’s a grand total of 36 months.

So, for a brand new customer, there’s a 61% chance they’ll deliver 36 months of revenue, and a 39% chance I get nothing (significant), for an expected 0.61 × 36 = 22 months on average.

To demonstrate why this method, while more tedious, is superior to the simplistic one, observe that if you ignore age groups, this same company would appear to have a 6% cancellation rate (which conceals the interesting customer behavior), and by the usual formula would have expected months of 1/0.06 ≈ 17 months, about a quarter less than the 22 months computed above. That’s a lot of error!

For those of you who like formulas, we can compile the example down to variables:

- r = short-term cancellation rate (e.g. 0.15)

- p = long-term cancellation rate (e.g. 0.03)

- s = number of months in the “short-term” age group (e.g. 3)

- (1-r)^s × (s + 1/p) = expected months

Yes, this is how I compute LTV at WP Engine, except worse! That’s because we’ve decided there are actually three distinct age groups, plus we treat coupon-based customers separately because they have a significantly higher cancellation rate, often for reasons that have nothing to do with our behavior.
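The two-stage formula (1-r)^s × (s + 1/p) translates directly into code. This sketch reproduces the worked example (15%/month for the first 3 months, then 3%/month) and compares it with the single-rate formula applied to the 6% blended rate quoted earlier. The 6% is taken from the text, not derived from r and p, and the function names are illustrative.

```python
def hybrid_expected_months(r: float, p: float, s: int) -> float:
    """(1-r)^s * (s + 1/p): only customers who survive the short-term period count,
    and each survivor is credited with the s months already served plus 1/p more."""
    survivors = (1 - r) ** s
    return survivors * (s + 1 / p)


def naive_expected_months(blended_rate: float) -> float:
    """The single-rate formula 1/p applied to the blended cancellation rate."""
    return 1 / blended_rate


hybrid = hybrid_expected_months(r=0.15, p=0.03, s=3)
naive = naive_expected_months(0.06)
print(f"hybrid: {hybrid:.1f} months, naive: {naive:.1f} months")
# hybrid: 22.3 months, naive: 16.7 months
print(f"LTV at $50/month: ${50 * hybrid:,.0f} vs ${50 * naive:,.0f}")
```

Like the formula it implements, this deliberately ignores revenue from customers who cancel during the short-term period, which keeps the estimate conservative.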

As a final note on LTV, I personally don’t care much about lifetime revenue, and prefer to compute lifetime operational profit, meaning the net revenue after taking out known costs of service. In the case of WP Engine, we knock off 2% for credit card fees and a certain amount for hosting and bandwidth costs.
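In code, the net version is a small change. The 2% card fee comes from the text; the per-customer hosting and bandwidth cost is a hypothetical placeholder, since no figure is given, and the `service_cost` parameter name is illustrative.

```python
def net_monthly_profit(monthly_revenue: float, card_fee_rate: float = 0.02,
                       service_cost: float = 0.0) -> float:
    """Monthly revenue minus the card-processing fee and a per-customer service cost."""
    return monthly_revenue * (1 - card_fee_rate) - service_cost


def net_ltv(monthly_revenue: float, expected_months: float,
            card_fee_rate: float = 0.02, service_cost: float = 0.0) -> float:
    """Lifetime operational profit: net monthly profit times expected months."""
    return net_monthly_profit(monthly_revenue, card_fee_rate, service_cost) * expected_months


# Hypothetical figures: $50/month, 22 expected months, $10/month hosting + bandwidth.
print(round(net_ltv(50, 22, service_cost=10), 2))  # 858.0
```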

Once you get down to that, you can easily answer questions like “how much money can we spend to acquire a customer.” For example, if LTV (net) is $500, it’s a pretty easy decision to spend $50 or even $150 to acquire a customer. That might mean AdWords, tradeshows, give-aways, coupons, affiliate programs, or anything! Extremely useful for confidently measuring marketing campaigns against profitable customer growth.

I guess that last sentence sounded like abstract business-speak drivel. But it’s true, and getting a solid handle on your cancellation rate is the key.

Besides, mathematical formulae aside, it’s perhaps the single best and easiest measure of whether you’re actually delivering on your promises to customers.

And what’s more important than that?

Did you make it all the way to here? Let’s continue the tips and tricks in the comments section.

39 responses to “Deep dive: Cancellation rate in SaaS business models”

I’d be interested to see the comparison between _enterprise_ SaaS and normal $39.95/month SaaS.

The churn on enterprise SaaS would presumably be incredibly low, because once a company invests in integration, workflows, training, etc. for a particular system, it’s going to be very hard to switch.

Great point, but not necessarily. HubSpot, for example, has plenty of enterprise-sized customers (hundreds, if not thousands), and yet measuring CHI (their prediction of customer happiness, defined as the likelihood that a customer will cancel) still drives almost everything that every employee does.

If the contract is yearly instead of monthly, you could make the argument that churn is necessarily over a longer timeframe, yes. But that doesn’t mean the churn is immaterial! It means that straight up “cancels” — i.e. customer stops paying — isn’t the right metric for you. Rather, you need something more like CHI — something you can measure monthly or even daily which tells you essentially the same thing, so you can react faster and keep those customers.

Because the flip-side to your argument is that enterprise customer acquisition is also extremely expensive, so you HAVE to get more than a year of service out of them, so cancellation is still vital.

Great Post!

You might want to check out my posts on Renewal failures in subscription SAAS business at:

http://www.sundarsubramanian.com/post/9327457224/adventures-in-online-subscription-business-part-1

http://www.sundarsubramanian.com/post/9367177650/adventures-in-online-subscription-business-part-2

Didn’t you say that cancellation rates were not the most important thing in “The full story of “the one important thing” for startups”?

https://blog.asmartbear.com/one-priority.html

BTW, your blog’s home page takes *forever* to load. Can’t you get the guys at WP Engine to improve that…? :-)

Yes, I said that for us it’s not the #1 thing. But it’s still important!

Glad to see your response. I agree.

Actually when I read your other post I saw the logic of it but it left me with a pit in my stomach that made me feel bad. While numerically more Google AdWords seems to make more logical sense, working on cancellation rates makes more intuitive sense to me. Both are important but I think the latter is critical. Glad to see you didn’t toss it out with the bathwater.

Here’s a better way to put it:

While cancellation rate stays low, signups are the most important thing.

If we see a rise in cancellations, that means the company is literally broken, which means growth is no longer important.

I’m going to find a way to apply this to my semiconductor business IF IT KILLS ME.

We measure things by the socket. (specific customer, specific pn#.) Our cancellations are usually driven by customer’s product lifecycles and/or end market success, plus our $R is usually a percentage of the total. (A commodity split btw 2-4 suppliers.)

And we’ve got a significant leadtime between “signup” (awareness, samples, price negotiation, ….) and 1st production shipments. Yes, I know. The measurements are more complicated.

My point? The model works – if you do a decent job of segmenting the pretenders from the winners aka “cancellation by age”.

Very nice post. Special props for the use of Binky.


Good for you! Check out Dharmesh Shah’s Business of Software talk from 2010 about “CHI.” They have a way of trying to predict “customer happiness,” meaning the % chance that they’re going to cancel, based on correlating various factors.

The result is that far before they actually technically “cancel” you can tell it’s not looking good, and either take an action to fix or at least plan around it, both for forecasting and the amount of time you spend with them.

You need to look into a great statistical simulation method called Monte Carlo. I won’t get into the details here, as you can just search and come up with thousands of pages of info, but its greatest benefit (in my mind) is getting out of the precise-number mindset.

At the end of the analysis it will provide you with a probability for each number instead of an exact number. For example, instead of saying that LTV will be $1,000 it will say:

10% probability of $10

20% probability of $200

40% probability of $1,000

20% probability of $1,200

10% probability of $2,000

…or something like that. Much more useful in my mind. Let me know if you’re interested in this concept and I could whip up an example for you based on your real numbers.

Best,

LB

Yup, I’m familiar. It’s a great idea — would be neat to have a web tool where you enter in a few parameters and you get the distribution instead of a number.

Note that it’s ALSO useful to have a single number when you’re combining with other things, to be simpler.
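For anyone wanting to try the Monte Carlo idea on the model from the post, here is a minimal sketch. It simulates customers month by month under the two-stage example rates (15%/month for 3 months, then 3%/month) with an assumed $50/month price, and reports a mean and percentiles instead of one number. Unlike the conservative formula in the post, it counts revenue from customers who cancel early, so its mean comes out somewhat higher. The function names and the 600-month cap are illustrative choices.

```python
import random
import statistics
from typing import List


def simulate_revenue(rng: random.Random, monthly_revenue: float, short_rate: float,
                     long_rate: float, short_months: int, max_months: int = 600) -> float:
    """Revenue from one simulated customer. They pay for a month, then cancel with
    that month's probability; the rate drops once they are past `short_months`."""
    months = 0
    while months < max_months:
        months += 1
        rate = short_rate if months <= short_months else long_rate
        if rng.random() < rate:
            break
    return monthly_revenue * months


def ltv_samples(n: int = 20_000, seed: int = 1, **params) -> List[float]:
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    return [simulate_revenue(rng, **params) for _ in range(n)]


samples = ltv_samples(monthly_revenue=50, short_rate=0.15, long_rate=0.03, short_months=3)
print(f"mean LTV: ${statistics.fmean(samples):,.0f}")
deciles = statistics.quantiles(samples, n=10)  # 9 cut points: 10th..90th percentile
print("10th / 50th / 90th percentile:", deciles[0], deciles[4], deciles[8])
```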

Have you performed cohort analysis by “why the customer signed up with us?” If you do decide you can afford give-aways, it may impact how long they stick around. The phone companies know this, as they lock in customers to pay for the give-away (discounted new phone, etc.).

Terrific idea. We do segment “coupon” from “non-coupon” but your idea is better.


Thanks for the great post!

You’ve mentioned 3 stages of churn rate, but I was wondering: if you have 15 months of data, couldn’t you use 15 stages? Your churn rate will probably converge after some months anyway, and you could use the last rate as the long-term churn.

Does that sound correct? Too complicated?

You could but it didn’t change the numbers much.

Usually there’s not that many forces dictating cancellation, and your model shouldn’t be more complex than reality. That is, people cancel after 8 months pretty much at the same rate and reason as after 9.

—

Jason Cohen

http://blog.ASmartBear.com

@asmartbear

How many months’ data should one use to compute an accurate picture of LTV and LT? 1, 3, 6 or more?

I would plot it at least monthly, more often if you have more transactions, and watch it over time.

The trend is often more telling than the absolute number.

Thank you for the very comprehensive post! I truly think it’s important to understand the cancellation rate metric and how to calculate it!

Creating a cohort analysis based on “time to cancel” can indeed allow focusing on long-term users, however I believe cohort can also be measured based on engagement level.

I’ve quoted your post and added my thoughts in Totango’s blog: http://blog.totango.com/2011/10/3-ways-to-do-cohort-analysis-on-saas-churn/

Excellent article, thanks for sharing all this with the community! I had a tough time finding how others compute LTV.


Jason, great post, very comprehensive.

Question: Have you trended your findings/results over time? Are the numbers getting worse or better as you take appropriate action(s)?

Thinking from a future protectionist viewpoint, I would want to know the underlying root causes of ‘why’ my customers were cancelling, and build a top 10 list with an appropriate prevention and recovery list of tactics for each.

Applying the 80/20 rule to these lists, I would then build in tactical ways for the customer to experience these actions before cancelling (albeit in a different tense since they wouldn’t have actually cancelled yet!)

One final consideration: gym memberships. Check out the archives of Harvard Business Review. They did an awesome study on why people cancel, and one key point was ongoing usage of the service, caused by psychological barriers that ‘creep’ over customers over time. So gyms focused on the enjoyment factor of the experience: free coffee, newspapers, handy health tips, humorous posters, attractive assistants, etc.

What can SaaS providers do?

Splash pages with cartoons on logout? Free Apps, Special reports via email, customer ezine tackling other challenges, links to value add… The list is huge. Surprise and fun is also important. Built on tip top support and reliability.

Any thoughts on these and your original post??

Robin.

On trending over time, you *must* do that. The trend is more important than the actual number. In fact, different businesses will naturally have different numbers; it’s whether it’s not growing (or, if you’re actively trying to affect it, whether it’s shrinking) that’s most interesting.

Jason, I agree with this thoughtful and thorough analysis, thank you for your insights.

I would like to contribute one additional layer of analysis for determining how much to invest in customer acquisition. When acquiring a customer to a SaaS offering, you’re paying the full cost of acquisition upfront (the Google CPC, the trade show fee, etc.) only to receive revenues from the customer in monthly increments over their entire lifespan.

To determine an appropriate acquisition cost we must look at the present value of the future revenue. Using your simple example of $50 per month over 20 months yields $1,000 nominally. But the present value of the $1,000 may only be $900 or $950. This present value of customer revenue is the number you should use to determine the appropriate investment in customer acquisition.

That’s true. Although nowadays interest rates are such that you probably can ignore NPV.

I think you need CAC very much less than LTV, so a small change in LTV shouldn’t change your mind.

Alternatively, this is a good argument for getting annual prepayment, even providing a discount for it. The amount of the discount is of course your NPV calculation!

—

Jason Cohen

http://blog.ASmartBear.com

@asmartbear
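To put a number on the present-value point, the same geometric-series argument used for LTV in the post extends to discounting. The sketch below assumes revenue arrives at the start of each month and a hypothetical 6% annual cost of capital; under those assumptions the $1,000 example comes out a little over $900, in the range the commenter suggested. The function names are illustrative.

```python
def present_value_ltv(monthly_revenue: float, p: float, monthly_discount: float) -> float:
    """Present value of R + R(1-p)/(1+i) + R(1-p)^2/(1+i)^2 + ...

    Same geometric series as the LTV derivation, with each month's expected
    revenue also discounted by (1+i). Closed form: R(1+i)/(i+p); with i = 0 it
    reduces to R/p."""
    i = monthly_discount
    return monthly_revenue * (1 + i) / (i + p)


def present_value_by_sum(monthly_revenue: float, p: float, monthly_discount: float,
                         months: int = 2000) -> float:
    """The same quantity, added up month by month as a cross-check."""
    ratio = (1 - p) / (1 + monthly_discount)
    return sum(monthly_revenue * ratio ** t for t in range(months))


annual_rate = 0.06                           # hypothetical cost of capital
monthly = (1 + annual_rate) ** (1 / 12) - 1  # equivalent monthly discount rate
print(round(present_value_ltv(50, 0.05, 0.0), 2))  # 1000.0
print(round(present_value_ltv(50, 0.05, monthly), 2))
print(round(present_value_by_sum(50, 0.05, monthly), 2))
```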

A smart bear indeed! Thank you for this great post.

I understand cancellation rates. Can you share your thoughts on how life time value can be computed if the product is not a periodic payment? We at http://graduatetutor.com/ provide private tutoring for MBAs. So by definition it is personalized and students can use an hour a week, or a few hours a day and stay for just a day or a few weeks or months/years too.

No matter the payment system, you’re answering this question:

What is the expected value for total revenue?

“Expected value” is a statistical concept which could be loosely interpreted as “weighted average.” It’s not the “most likely value” but rather the average, weighted by the probability of each value.

So a rough way to do it would be to *convert* your complex thing into an average monthly rate. For *each* customer you compute:

equiv_monthly_rate = total_revenue / total_months

Then you could average those rates to get your standard monthly rate, then use the logic from this article.

Of course using averages like this covers up important data, like how spiky it might be, or how certain customers might take hours consistently and others don’t, and all sorts of other things.

But it could at least get you a ballpark answer, and something you could track over time.
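The conversion described above might look like this in Python. The sample history and the 10% monthly drop-off rate are made up for illustration; in practice p would come from measuring how often customers stop buying.

```python
from statistics import fmean


def equivalent_monthly_rate(total_revenue: float, total_months: float) -> float:
    """Convert one customer's irregular spending into an average per-month figure."""
    return total_revenue / total_months


# Hypothetical customers: (total revenue, months since they first bought).
history = [(600.0, 4), (150.0, 1), (1200.0, 10)]
rates = [equivalent_monthly_rate(revenue, months) for revenue, months in history]
avg_rate = fmean(rates)

p = 0.10  # hypothetical monthly rate at which customers stop buying
print(avg_rate)                  # 140.0
print(round(avg_rate / p, 2))    # 1400.0
```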

Any opinion about billing contributing to or causing some cancellations? What I am asking is, have you analyzed, say, the model where a credit card is on file and monthly amounts are just charged, as opposed to a large quarterly or annual invoice, where customers have to be notified that payment is due and they then realize that now is the time to cancel if they plan to? They might just keep paying if a credit card or direct debit were automatically taking the money without any red alerts sounding?

To bring it back to cancellation rate calculations, I am curious if anyone has analyzed how billing models affect cancellation rates, and if one model works better than another for retention. My company now requires annual commitments for its SaaS service, but you can pay monthly with a credit card on file, quarterly via credit card, wire transfer, or invoiced payment, or annually (same options, with a deep discount for this annual prepayment). My suspicion is that when we give a company 30 days’ warning that their annual payment is due, they start to really look at the service hard and decide at times that this is the time to get out from under. My last company accepted only credit card or direct debit, unless the customer was very large, in which case we were willing to bill them manually.

That’s a great question, and no I don’t have data of my own.

It’s probably true that the smaller the payment and the less fuss is made over it, the less likely the customer is to cancel.

From a financial perspective that’s important because that’s money in your pocket.

From a “healthy business” perspective that’s NOT good because it’s masking the truth, which is that people don’t really need your stuff, which is a more fundamental problem.

I agree that customers cancelling yearly prepayments/commitments brings a very valuable signal to the company. Did they not renew because price is too high in comparison with competitors? A lack of new features compared to competitors? Customer service quality has gone down? Etc.

In other words, you’re gaining valuable information that you would otherwise not receive from customers who don’t think twice about a perceived lower cost (because of lower monthly payments). Customers will do work for you that you should be doing anyway (reviewing price and value compared with competitive options), so make sure to capture the reason for these cancellations very diligently.

It depends. Annual commitments definitely reduce churn when you have an average lifetime of less than 12 months. However, this can and does affect signups; some just want to use the service for a short time, not a long time. That’s where it gets difficult to continue to show value. There are so many ways to manipulate these numbers, starting with how and how often you communicate with them. This is typically a big trigger because you may be reminding them to quit. A better strategy is to renew automatically and require customers to opt out to unsubscribe.

Hi Jason,

Some interesting stuff here. I’ve done some similar work on retention and the marginal advantage of keeping SaaS customers longer than average. Take a look and let me know what you think.

http://centriclogicblog.wordpress.com/2012/10/02/why-saas-companies-need-to-focus-on-retention/

Rgds,

John

Thanks Jason. Will be something I refer to when I launch my own SAAS :)

Hi Jason, thanks for the great article! You mentioned that at WP Engine, you use three distinct age groups. I too am trying to calculate tenure for cohorts with three different “stages”, and wanted to check that my math is right. Would the formula for lifespan of a cohort with three different age groups be (1-r)^s * (1-x)^n * (1 + 1/p), where n = number of months in the “medium term” age group and x is the churn rate of that group?

Would really appreciate any guidance! Thanks!

Yes, except the last is just 1/p without adding one.

Hey Jason, thanks so much for the quick reply. I thought about it more and after doing some math, I think it should actually be (prob reaching month s)*(s) + (prob reaching month n)*(n) + (prob reaching month n+1)(expected lifetime for month n+1 onwards). This translates to s*(1-r)^s + n*(1-r)^s * (1-x)^(n-s) + (1/d)*(1-r)^s * (1-x)^(n-s) * (1-d), where n = number of months in the medium-age group, x is that group’s churn rate, and d is the churn rate of the oldest group. An example equation would be (1-0.3)^1 + 3(1-0.3)(1-0.2)^2 + (1/0.1)*(1-0.3)(1-0.2)^2(1-0.1)…does this make sense? Thanks so much again!

It’s hard to follow. I think you might be skipping the fact that “chance to get to n” is really “chance to get to s” times “chance to get to n-s” in the first part, though maybe it’s right in the second example.

Best might be to just model every month in a spreadsheet, one per line. Easier to think through what is happening each month, and tweak parameters or the model.
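Following that suggestion, here is a rough Python equivalent of the one-row-per-month spreadsheet, generalized to any number of age groups. It is a sketch, not the method from the post: summing the monthly “chance they pay” column counts every paid month, including the early months from customers who later cancel, which the post’s conservative formula deliberately ignores, so it gives a somewhat higher figure. The stage lengths, rates, and function names in the example are made up.

```python
from typing import List, Optional, Tuple

Stage = Tuple[Optional[int], float]  # (months in stage, monthly cancellation rate)


def rate_for_month(stages: List[Stage], month: int) -> float:
    """Cancellation rate that applies in `month` (1-based). The last stage lasts forever."""
    boundary = 0
    for length, rate in stages[:-1]:
        boundary += length
        if month <= boundary:
            return rate
    return stages[-1][1]


def monthly_model(stages: List[Stage], horizon: int = 600) -> List[Tuple[int, float, float]]:
    """One row per month, like a spreadsheet: (month, rate, chance the customer pays that month)."""
    rows = []
    still_here = 1.0
    for month in range(1, horizon + 1):
        rate = rate_for_month(stages, month)
        rows.append((month, rate, still_here))
        still_here *= 1 - rate  # share of customers who carry on into the next month
    return rows


def expected_months(stages: List[Stage], horizon: int = 600) -> float:
    """Sum of the 'chance they pay' column = expected number of paid months."""
    return sum(paying for _, _, paying in monthly_model(stages, horizon))


# Hypothetical three-stage example: 30% in month 1, 20% in months 2-3, 10% after that.
three_stage = [(1, 0.30), (2, 0.20), (None, 0.10)]
for row in monthly_model(three_stage)[:5]:
    print(row)
print(round(expected_months(three_stage), 2))  # 6.74
```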